Why Environment Isn’t Just Background Noise

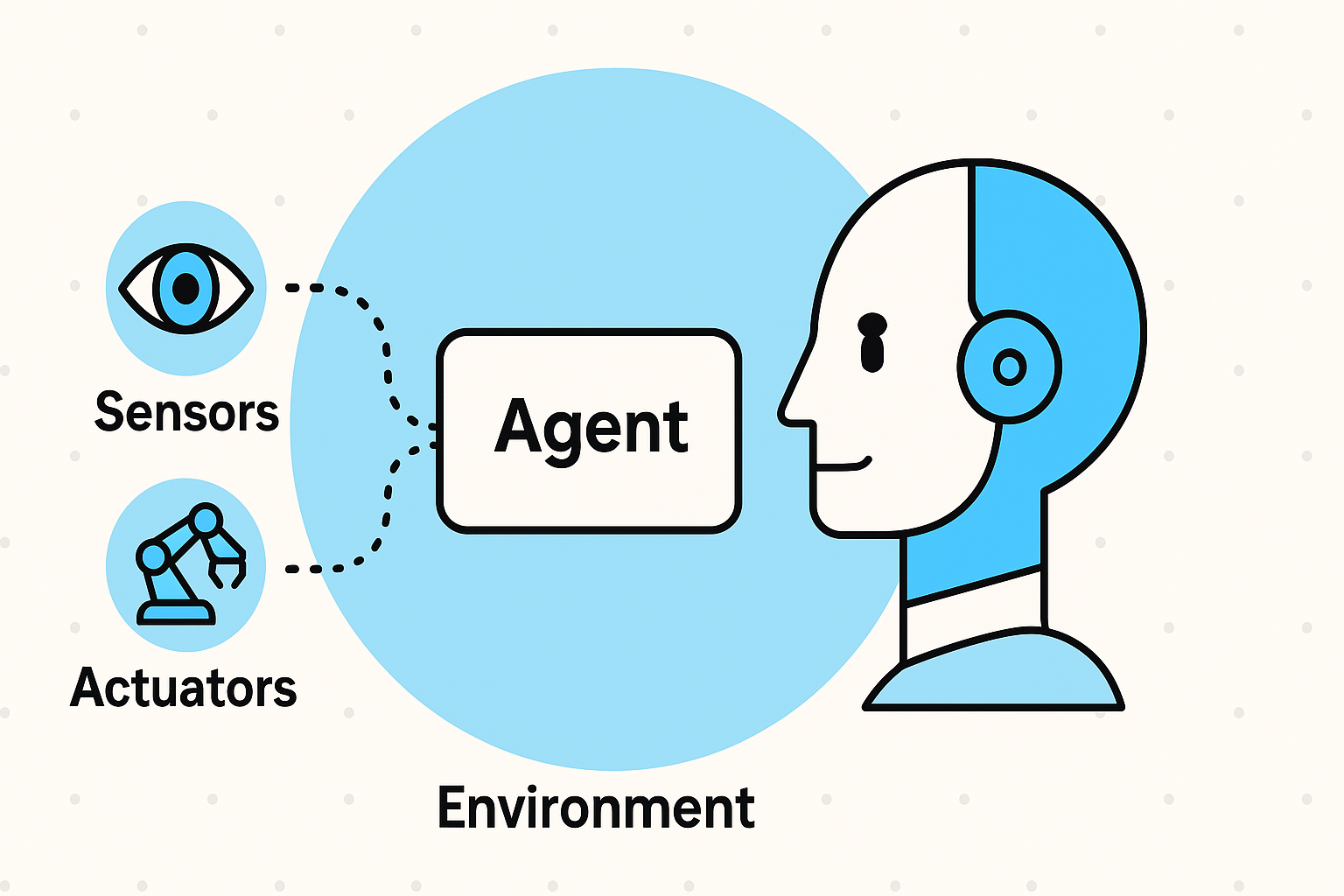

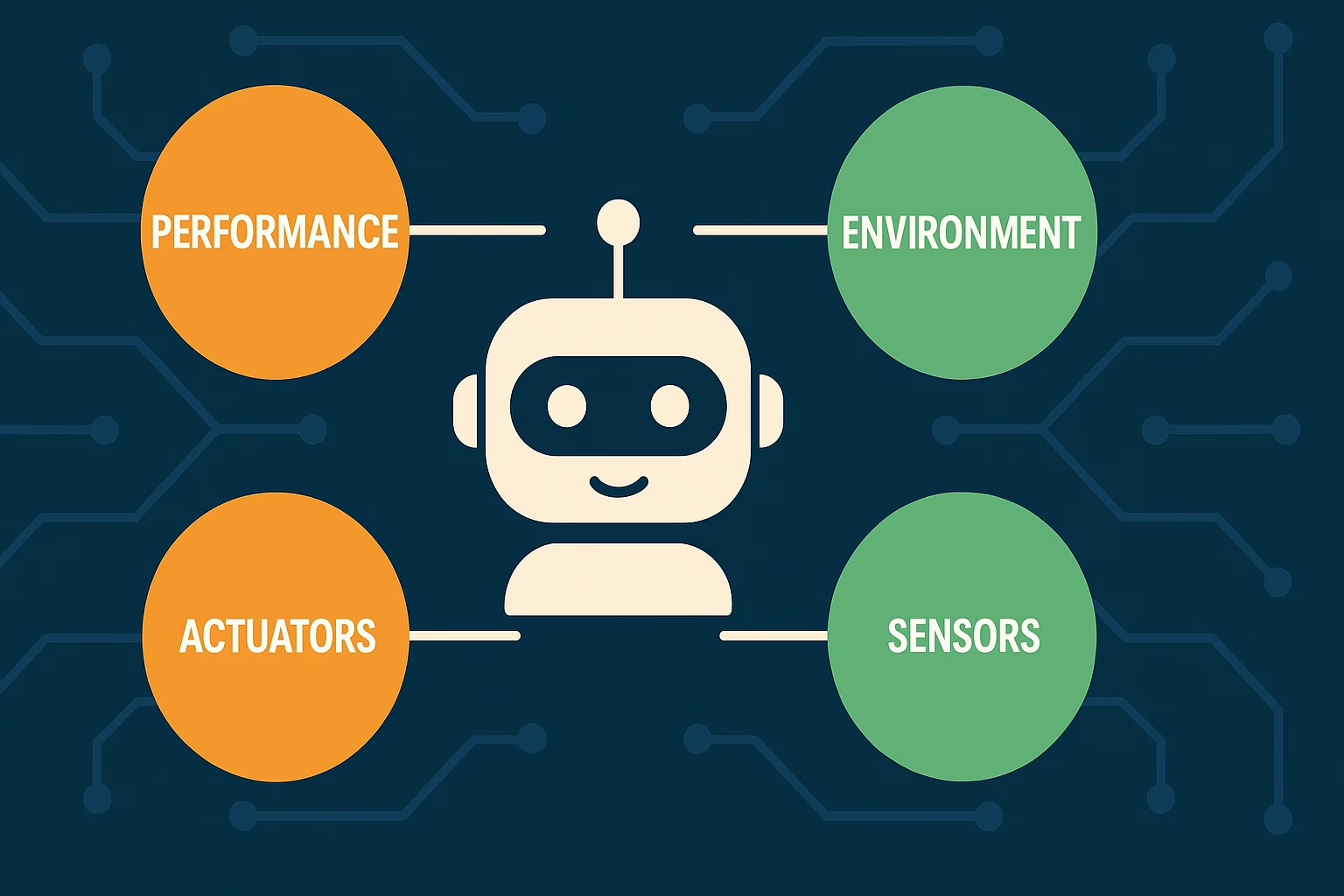

In the world of AI, the environment isn’t just where things happen—it defines how things happen.

Whether you’re building a vacuum robot or a world-conquering digital assistant (hopefully not the latter), your agent’s intelligence means nothing without understanding the world it interacts with. And that world? That’s the agent’s environment.

Let’s break down the types of environments in AI, how they work, and why they shape your agent’s brain more than you might think.

What Are Agent Environments in AI?

An agent environment is the world or setting that an AI agent operates in. It could be:

- A chessboard

- A smart home

- A warehouse

- Even the internet

Every environment has rules, information availability, and dynamics that influence how the agent behaves.

In simple terms, the agent perceives the environment through sensors, acts on it using actuators, and tries to make sense of the results. Rinse and repeat.
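Here is a minimal Python sketch of that perceive-act loop. It assumes a made-up one-dimensional world. `LineWorld`, `simple_agent`, and `run` are hypothetical names for illustration, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class LineWorld:
    """Hypothetical toy environment: the agent walks along a line toward a goal."""
    position: int = 0
    goal: int = 3

    def percept(self) -> int:
        # What the agent's "sensors" report: signed distance to the goal.
        return self.goal - self.position

    def apply(self, action: int) -> None:
        # The agent's "actuators": step left (-1), right (+1), or stay (0).
        self.position += action


def simple_agent(percept: int) -> int:
    """Step toward the goal; stay put once the distance is zero."""
    if percept > 0:
        return 1
    if percept < 0:
        return -1
    return 0


def run(env: LineWorld, agent, max_steps: int = 10) -> list[int]:
    """Perceive, decide, act, repeat. Returns the positions visited."""
    trace = [env.position]
    for _ in range(max_steps):
        action = agent(env.percept())  # sense, then decide
        if action == 0:
            break                      # goal reached, nothing left to do
        env.apply(action)              # act on the world
        trace.append(env.position)
    return trace


print(run(LineWorld(), simple_agent))  # [0, 1, 2, 3]
```

Swapping in a different environment or agent leaves `run` unchanged: the loop is generic, and the environment decides what the agent has to cope with.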

But not all environments are created equal. Let’s meet the types!

Fully Observable vs. Partially Observable

This one’s all about what the agent can see.

✅ Fully Observable Environments

In these, the agent has access to all relevant information at any given moment. No secrets. No guessing.

- Example: Chess. You see the full board, every piece, and every legal move.

- Impact on agent design: Simple! No need for memory or guesses about hidden information, so planning is easier.

❌ Partially Observable Environments

Here, the agent only gets partial snapshots of what’s going on. Like playing hide-and-seek with a blindfold.

- Example: Driving in fog. Your car can’t see beyond a few feet, so it must guess or predict the rest.

- Impact on agent design: Agents must maintain an internal model of what they can’t currently observe and update it as new sensor readings arrive. Reflex alone won’t cut it.
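A common way to maintain such an internal model is a belief state: a probability for each place the hidden thing might be, updated after every move and every noisy reading. The sketch below is a textbook discrete Bayes filter in Python, applied to a robot locating itself in a corridor. The five-cell circular corridor, its door layout, the 0.8 sensor accuracy, and the perfectly reliable motion are all made-up assumptions for illustration.

```python
def normalize(belief):
    """Rescale so the probabilities sum to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief, world, reading, p_correct=0.8):
    """Bayes update: weight each cell by how well it explains the noisy reading."""
    return normalize([
        b * (p_correct if world[i] == reading else 1 - p_correct)
        for i, b in enumerate(belief)
    ])


def move(belief, steps):
    """Shift the belief `steps` cells right (motion assumed exact, corridor wraps around)."""
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


# Hypothetical corridor layout; the agent never observes its own position directly.
world = ["door", "wall", "door", "wall", "wall"]
belief = [1 / len(world)] * len(world)  # start fully uncertain

belief = sense(belief, world, "door")  # sensor reports a door
belief = move(belief, 1)               # agent moves one cell right
belief = sense(belief, world, "wall")  # sensor reports a wall
print([round(b, 3) for b in belief])   # [0.026, 0.421, 0.026, 0.421, 0.105]
```

Most of the probability ends up on cells 1 and 3, the two walls directly to the right of a door. No single percept revealed that; the agent had to combine what it saw with what it remembered.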

🧠 Key takeaway: Observability determines how much your agent has to remember and infer about the parts of the world it can’t see.

Deterministic vs. Stochastic

This is about predictability.

✅ Deterministic Environments

Every action has a guaranteed outcome. You push a button, and a light turns on. No surprises.

- Example: A vending machine (assuming it’s not broken).

- Impact: Easy for agents to plan. No randomness to account for.
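Because each action has exactly one outcome, an agent can plan by simulating actions in its head and searching for a sequence that reaches its goal. A minimal Python sketch using breadth-first search, assuming a hypothetical vending machine that accepts only dimes and quarters, gives no refunds, and tracks credit in cents:

```python
from collections import deque
from typing import Optional

# Hypothetical vending machine: the only actions are inserting these coins.
COINS = {"dime": 10, "quarter": 25}


def result(credit: int, action: str) -> int:
    """Deterministic transition: the same coin always adds the same credit."""
    return credit + COINS[action]


def plan(start: int, target: int) -> Optional[list]:
    """Breadth-first search for the shortest coin sequence reaching exactly `target` cents."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        credit, actions = frontier.popleft()
        if credit == target:
            return actions
        for action in COINS:
            nxt = result(credit, action)
            # No refunds in this toy model, so overshooting the target is a dead end.
            if nxt <= target and nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # the target cannot be reached with these coins


print(plan(0, 45))  # ['dime', 'dime', 'quarter']
print(plan(0, 15))  # None
```

The whole plan is computed before the agent touches the machine, because nothing about the outcome is left to chance.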

❌ Stochastic Environments

Here, actions come with uncertainty. You do something, but the outcome isn’t always the same.

- Example: Rolling dice in a board game. You know the probabilities but not the result.

- Impact: Agents need to evaluate probabilities and plan for multiple outcomes. This often leads to the use of utility-based agents.
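A utility-based agent weights each possible outcome by its probability and picks the action with the highest expected utility. Here is a minimal Python sketch, assuming a hypothetical board-game turn where the agent either takes a guaranteed 3-square move or rolls one fair six-sided die and moves that many squares. Squares moved stands in for utility.

```python
from fractions import Fraction

# Hypothetical turn options: each maps to a list of (probability, utility) outcomes.
ACTIONS = {
    "take_3": [(Fraction(1), 3)],                                  # certain outcome
    "roll_die": [(Fraction(1, 6), face) for face in range(1, 7)],  # fair d6
}


def expected_utility(outcomes):
    """Probability-weighted average of the utilities."""
    return sum(p * u for p, u in outcomes)


def best_action(actions):
    """Pick the action whose expected utility is highest."""
    return max(actions, key=lambda a: expected_utility(actions[a]))


for name, outcomes in ACTIONS.items():
    print(name, expected_utility(outcomes))  # take_3 3, then roll_die 7/2
print(best_action(ACTIONS))                  # roll_die
```

Rolling wins on average (3.5 vs. 3) even though it can also produce a 1. An agent that cares more about avoiding bad rolls could use a different utility function without changing this structure.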

💡 If your agent can’t rely on outcomes, it had better be flexible.

Discrete vs. Continuous Environments

Now let’s talk numbers.

✅ Discrete Environments

These have a finite set of actions and states.

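- Example: Board games like checkers or tic-tac-toe.

- Impact: Agents can list every available move at each step, and in small games like tic-tac-toe even every reachable position. That makes search-tree algorithms such as minimax a natural fit.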
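Tic-tac-toe is small enough for an agent to search the entire game tree. Below is a minimal Python sketch of minimax, assuming the board is a 9-character string of `'X'`, `'O'`, and spaces, read row by row. Memoization with `functools.lru_cache` keeps the search fast.

```python
from functools import lru_cache

# All eight winning lines on a 3x3 board, as index triples.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if someone has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def play(board: str, i: int, player: str) -> str:
    return board[:i] + player + board[i + 1:]


def other(player: str) -> str:
    return "O" if player == "X" else "X"


@lru_cache(maxsize=None)
def minimax(board: str, player: str) -> int:
    """Game value with `player` to move: +1 X wins, -1 O wins, 0 draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # board full, nobody won
    scores = [minimax(play(board, i, player), other(player))
              for i, cell in enumerate(board) if cell == " "]
    # X maximizes the value, O minimizes it.
    return max(scores) if player == "X" else min(scores)


def best_move(board: str, player: str) -> int:
    """Index of the empty cell with the best minimax value for `player`."""
    moves = [i for i, cell in enumerate(board) if cell == " "]
    pick = max if player == "X" else min
    return pick(moves, key=lambda i: minimax(play(board, i, player), other(player)))


print(minimax(" " * 9, "X"))        # 0: perfect play is a draw
print(best_move("XX OO    ", "X"))  # 2: complete the top row
```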

❌ Continuous Environments

Everything happens on a spectrum. States and actions take real values, so there are infinitely many of them.

- Example: Flying a drone. The drone can tilt, roll, or accelerate across a smooth, continuous range.

- Impact: Requires advanced control models built on continuous math such as calculus, or learning methods like reinforcement learning that can handle continuous states and actions.
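One classic continuous-control tool is a feedback controller that outputs a smooth, real-valued command based on the error. Below is a minimal Python sketch of a proportional-derivative (PD) controller, assuming a hypothetical drone reduced to a single dimension (altitude) with gravity and drag ignored. The gains `kp` and `kd` and the time step `dt` are illustration values, not tuned for any real aircraft. The simulation itself is an approximation: it advances continuous motion in small discrete time steps.

```python
def simulate_altitude(target: float, kp: float = 2.0, kd: float = 3.0,
                      dt: float = 0.05, steps: int = 400) -> float:
    """PD control of a hypothetical 1-D point mass; returns the final altitude."""
    altitude, velocity = 0.0, 0.0
    for _ in range(steps):
        error = target - altitude
        # Continuous command: push toward the target in proportion to the error,
        # and damp by the current velocity to avoid overshooting.
        accel = kp * error - kd * velocity
        # Euler-style integration: approximate smooth motion with small time steps.
        velocity += accel * dt
        altitude += velocity * dt
    return altitude


print(round(simulate_altitude(10.0), 3))  # 10.0
```

Shrinking `dt` brings the simulation closer to true continuous motion at the cost of more computation.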

📉 In continuous environments, approximation becomes your best friend.

Static vs. Dynamic Environments

Can the world change while the agent is thinking?

✅ Static Environments

Nothing changes unless the agent acts.

- Example: A crossword puzzle. The grid stays exactly as it is until you write something.

- Impact: Agents can take their sweet time to think.

❌ Dynamic Environments

Things change constantly, whether the agent acts or not.

- Example: Real-time stock trading. The market keeps moving, no matter how long you stare at it.

- Impact: Agents must be fast, reactive, and capable of real-time decision-making.
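One common response is an anytime strategy: keep improving a decision, but commit to the best one found so far when a deadline arrives. Here is a minimal Python sketch, assuming a hypothetical list of candidate actions and a caller-supplied scoring function. Only the standard library's `time.monotonic` is used.

```python
import time


def anytime_choice(candidates, score, budget_s: float):
    """Score candidates until the time budget runs out; return the best one seen.

    The first candidate (if any) is always scored, so a result is always available.
    """
    deadline = time.monotonic() + budget_s
    best, best_score = None, float("-inf")
    for i, candidate in enumerate(candidates):
        if i > 0 and time.monotonic() >= deadline:
            break  # out of time: act on what we have
        s = score(candidate)
        if best is None or s > best_score:
            best, best_score = candidate, s
    return best


# Hypothetical scoring: prefer candidates close to 4.
print(anytime_choice(range(10), lambda x: -(x - 4) ** 2, budget_s=1.0))  # 4
print(anytime_choice(range(10), lambda x: -(x - 4) ** 2, budget_s=0.0))  # 0
```

Under a tight budget the answer is worse, but it arrives on time, which in a dynamic environment can matter more than being optimal.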

⚡ Thinking too long in a dynamic world? You’ve already lost.

Episodic vs. Sequential Environments

Let’s talk memory.

✅ Episodic Environments

Each interaction is independent. What happens now doesn’t affect what happens next.

- Example: Spam filtering in email. One email doesn’t change the others.

- Impact: Simple logic works. Great for rule-based agents.
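Because each email is judged on its own, an episodic agent can be a pure function of the current input, with no state carried between decisions. Below is a minimal rule-based Python sketch, assuming a hypothetical keyword list and threshold. Production spam filters rely on much richer signals.

```python
SPAM_WORDS = {"winner", "free", "prize", "urgent"}  # hypothetical rule set


def is_spam(email_text: str, threshold: int = 2) -> bool:
    """Classify one email on its own; nothing is remembered between calls."""
    words = {w.strip(".,!?:;").lower() for w in email_text.split()}
    return len(words & SPAM_WORDS) >= threshold


inbox = [
    "URGENT: you are a WINNER, claim your free prize!",
    "Meeting moved to 3pm, agenda attached.",
]
print([is_spam(msg) for msg in inbox])  # [True, False]
```

Processing the inbox in any order gives the same labels, which is exactly what makes the environment episodic.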

❌ Sequential Environments

What you do now affects the future.

- Example: Playing a video game. Saving ammo early can make or break your chances in later levels.

- Impact: Agents must plan over time, think long-term, and maybe even learn from experience.
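Planning over time is often done by working backward from the end, as in dynamic programming. Here is a minimal Python sketch, assuming a hypothetical three-level game with 4 units of ammo. On each level the agent chooses how much ammo to spend, spending more raises that level's score with diminishing returns, and leftovers carry over. The payoff table is invented for illustration.

```python
from functools import lru_cache

# Hypothetical GAIN[level][spent]: score for spending `spent` ammo on that level.
# Supports at most 4 units of ammo in total.
GAIN = [
    [0, 3, 4, 4, 4],  # first level: extra ammo barely helps
    [0, 2, 3, 3, 3],  # second level
    [0, 0, 5, 8, 9],  # final level: boss fight, needs ammo to score at all
]


@lru_cache(maxsize=None)
def best(level: int, ammo: int) -> int:
    """Highest total score achievable from `level` onward with `ammo` left."""
    if level == len(GAIN):
        return 0
    return max(GAIN[level][spent] + best(level + 1, ammo - spent)
               for spent in range(ammo + 1))


def ammo_plan(total_ammo: int) -> list[int]:
    """Recover how much ammo to spend on each level under the optimal policy."""
    spends, ammo = [], total_ammo
    for level in range(len(GAIN)):
        spent = max(range(ammo + 1),
                    key=lambda s: GAIN[level][s] + best(level + 1, ammo - s))
        spends.append(spent)
        ammo -= spent
    return spends


print(best(0, 4), ammo_plan(4))  # 11 [1, 0, 3]
```

Grabbing the highest score on each level in turn (2 ammo on the first, 2 on the second) totals 7 points and leaves nothing for the boss. The optimal plan saves ammo early and scores 11.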

📚 Sequential = Story mode. And your agent had better write a smart story.

Why These Environment Types Matter for Agent Design

Now, you might wonder: Why not just build one awesome agent and call it a day?

Because every environment demands a different kind of brain.

| Environment Trait | Agent Feature Needed |
|---|---|
| Partially Observable | Memory or internal state |
| Stochastic | Probability & risk handling |
| Continuous | Fine-tuned control systems |
| Dynamic | Fast decision-making |
| Sequential | Long-term planning |
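The table can also be read as a simple lookup from an environment profile to design requirements. Here is a minimal Python sketch. The `EnvironmentProfile` fields and the classifications of chess and driving in fog are hypothetical illustrations that simply mirror the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    partially_observable: bool
    stochastic: bool
    continuous: bool
    dynamic: bool
    sequential: bool


# One entry per table row: trait -> agent feature needed.
REQUIREMENTS = {
    "partially_observable": "Memory or internal state",
    "stochastic": "Probability & risk handling",
    "continuous": "Fine-tuned control systems",
    "dynamic": "Fast decision-making",
    "sequential": "Long-term planning",
}


def needed_features(env: EnvironmentProfile) -> list[str]:
    """List the features required by every trait the environment has."""
    return [feature for trait, feature in REQUIREMENTS.items() if getattr(env, trait)]


# Hypothetical classifications, following the examples in this article.
chess = EnvironmentProfile(False, False, False, False, True)
driving_in_fog = EnvironmentProfile(True, True, True, True, True)
print(needed_features(chess))  # ['Long-term planning']
```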

A great agent isn’t just smart—it’s built for its world.

Final Thoughts: The Environment Builds the Mind

Designing an AI agent without understanding its environment is like sending a scuba diver into outer space.

Whether your AI lives in a game, a smart fridge, or a robot army (😅), its success depends on how well it’s tailored to its environment.

So always ask: What kind of world is my agent stepping into?

Because how your agent sees, acts, and survives depends on it.
